What does F = ma have to do with “Entropy”?

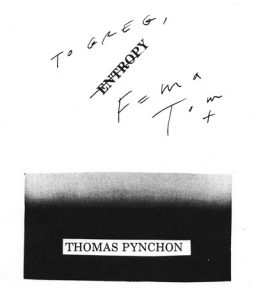

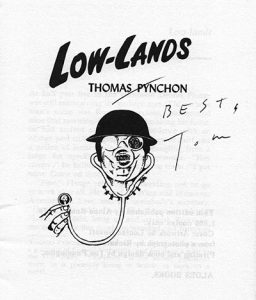

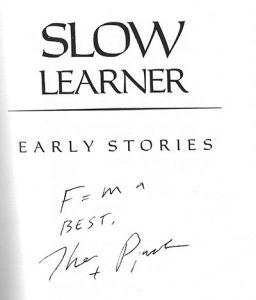

Among the books owned by the late UNIX pioneer Greg Chesson are two signed copies of Pynchon’s story “Entropy,” a bootleg edition and a first edition of the collection Slow Learner. In both copies, Pynchon did something unusual: along with his signature, he inscribed the equation for Newton’s second law of motion, F = ma, i.e., force (F) equals mass (m) times acceleration (a). In the bootleg edition, Pynchon went even further. Rather than cross out his printed name above his autograph, which authors sometimes do to emphasize their more personal signature (see the Low-Lands autograph below), Pynchon instead crossed out the word “Entropy” and wrote the equation beneath it.

- “Entropy” Bootleg Pamphlet

- “Low-Lands” Bootleg Pamphlet

- Slow Learner

Why would Pynchon write down Newton’s second law on a copy of a story about the second law of thermodynamics? One possibility is that this is a bit of an inside joke and a note of encouragement to a friend – hence the crossing out of the title and its replacement with F = ma in the bootleg copy. Intuitively, entropy suggests dissolution, a system that’s running down. Pynchon’s story muses on the negative consequences of the inexorable increase in entropy: disorder, death, and the ultimate end of the universe. F = ma suggests the opposite – a positive force, acceleration rather than loss of motion, an ability to act to alter the world, rather than simply let things run their course.

This positive power to act decisively in the face of disorder is embodied by two characters in “Entropy”, Meatball Mulligan and Aubade, who make crucial decisions to change the systems of which they are a part. Meatball Mulligan restores order and momentum to his lease-breaking party, which had reached its third day and was running down. Aubade smashes the window of Callisto’s hermetically sealed apartment, upsetting its thermal equilibrium with a rush of cold spring air. Force as a metaphor also becomes explicit later in Pynchon’s work, with the Counterforce as a political opposition that stands against the sinister forces of war and greed. Chesson, with his technical background, would likely have recognized the equation for Newton’s second law, and understood its implications.

However, this popular sense that entropy and force are opposites, that entropy suggests something negative and passive, while force is positive or active, is technically not correct. Newton’s second law is in fact integral to one definition of entropy. Once we recognize this, Pynchon’s inscription of “F = ma” takes on another, more ironic, interpretation. To make this interpretation clear, we first have to understand the relationship between F = ma and the concept of entropy as defined in the branch of physics called statistical mechanics.

Classical Thermodynamic Entropy

While Pynchon has warned us to “not underestimate the shallowness of my understanding” of entropy (Introduction, Slow Learner), his comments in the introduction to Slow Learner and passages in the story itself show that Pynchon is well aware of the statistical mechanical version of entropy, and how it relates to other versions, especially the first, classical thermodynamic definition. As Pynchon notes in his Slow Learner introduction, the idea of entropy was first developed by the 19th-century physicist Rudolf Clausius, who built on earlier ideas of the French engineer Sadi Carnot. Carnot and Clausius were both trying to understand how heat energy is transformed into useful work, such as when steam drives a piston in an engine. Clausius defined entropy in terms of heat flow and temperature; loosely, it measures how much of a system’s heat energy is no longer available to be usefully transformed into work.

More broadly, this classical definition of entropy is about irreversibility. Heat spontaneously flows from something hot to something cold; as it does so, heat can do useful work, like power a steam engine. But the reverse never happens – your forgotten, lukewarm cup of coffee never absorbs the ambient heat of the air to become hot again. This classical notion of entropy as capturing the irreversibility of heat flows in the universe is represented in “Entropy” by the storyline of Callisto, who lives in a hermetically sealed apartment and vainly tries to revive a dying bird with his body heat.
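For readers who want to see the bookkeeping, here is a short worked example of the classical picture. It uses the standard Clausius relation between entropy, heat, and temperature. The specific numbers (coffee at 350 K, room air at 300 K, 100 J of heat transferred) are illustrative assumptions, not figures from Pynchon or Clausius, and the temperatures are treated as fixed during the small transfer.

```latex
% Clausius: entropy change for heat \delta Q exchanged reversibly at temperature T
dS = \frac{\delta Q_{\mathrm{rev}}}{T}

% A small amount of heat Q leaves the hot coffee and enters the cooler room air
\Delta S_{\mathrm{total}}
  = \underbrace{-\frac{Q}{T_{\mathrm{hot}}}}_{\text{coffee}}
  + \underbrace{\frac{Q}{T_{\mathrm{cold}}}}_{\text{room}}
  = Q\left(\frac{1}{T_{\mathrm{cold}}} - \frac{1}{T_{\mathrm{hot}}}\right) > 0
  \quad \text{whenever } T_{\mathrm{hot}} > T_{\mathrm{cold}}

% Illustrative numbers: Q = 100 J, T_hot = 350 K, T_cold = 300 K
\Delta S_{\mathrm{total}}
  = 100\,\mathrm{J}\left(\frac{1}{300\,\mathrm{K}} - \frac{1}{350\,\mathrm{K}}\right)
  \approx 0.048\,\mathrm{J/K}
```

Running the same transfer in reverse, from the room into the coffee, would flip the sign and make the total entropy decrease, which is exactly what the second law of thermodynamics forbids.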

Entropy in Statistical Mechanics

One important limit to Clausius’ classical concept of entropy is that it is purely macroscopic: it is a measure of the irreversible changes in the universe, but it doesn’t really explain why these changes are irreversible. The world around us consists of atoms; any satisfying explanation of why your cold coffee doesn’t spontaneously get hot should refer to the behavior of those atoms. Enter statistical mechanics – a branch of physics developed in the late 19th century by three physicists that Pynchon repeatedly refers to in his work, Boltzmann, Gibbs, and Maxwell. Maxwell turns up in the Slow Learner introduction and The Crying of Lot 49, while Boltzmann and Gibbs are specifically mentioned in “Entropy.” Clearly, Pynchon knows of their work.

The goal of these physicists as they developed statistical mechanics was to explain the macroscopic phenomena of the world in terms of the microscopic jostling of atoms. Rather than working with the bulk thermodynamic properties that Clausius was concerned with, like heat and temperature, statistical mechanics explains things in terms of the velocity and mass of individual atoms. From this comes the well-known idea of entropy as a measure of disorder. Why does your coffee cup cool down to room temperature? Clausius would say that this happens because heat irreversibly flows from a hot object to a cooler one. Boltzmann, however, would explain it as the inevitable result of atoms moving from a less probable, more ordered state, to a more probable, disordered one. A hot cup of coffee in a cool room is in one sense a more ordered state: higher energy, rapidly moving molecules are confined to the small volume of the coffee cup, while lower energy air molecules are bouncing around in the much larger volume outside the cup. Over time, as these molecules bounce around, the whole system reaches a much more probable state in which the energy of all molecules in the coffee, cup, and room air is much more equally distributed.
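Boltzmann's "more probable state" can be made concrete by counting. The sketch below is a toy model, not anything from the story or from Boltzmann's own papers: it assumes the coffee and the room are two "Einstein solids" (collections of identical oscillators that share indivisible units of energy), a standard textbook simplification. The sizes (10 oscillators for the coffee, 90 for the room, 100 energy units) and all function names are hypothetical choices made for illustration.

```python
from math import comb, log


def multiplicity(oscillators: int, quanta: int) -> int:
    """Number of ways to distribute `quanta` energy units among `oscillators`."""
    return comb(quanta + oscillators - 1, quanta)


def ways(n_coffee: int, n_room: int, q_coffee: int, q_total: int) -> int:
    """Microstates of the whole system when the coffee holds `q_coffee` units."""
    return multiplicity(n_coffee, q_coffee) * multiplicity(n_room, q_total - q_coffee)


def entropy(n_coffee: int, n_room: int, q_coffee: int, q_total: int) -> float:
    """Boltzmann entropy in units of k_B: S / k_B = ln(W)."""
    return log(ways(n_coffee, n_room, q_coffee, q_total))


def most_probable_split(n_coffee: int, n_room: int, q_total: int) -> int:
    """Energy held by the coffee in the macrostate with the most microstates."""
    return max(range(q_total + 1),
               key=lambda q: ways(n_coffee, n_room, q, q_total))


if __name__ == "__main__":
    n_coffee, n_room, q_total = 10, 90, 100  # assumed toy sizes
    best = most_probable_split(n_coffee, n_room, q_total)
    print("hot-coffee start, S/k_B:", entropy(n_coffee, n_room, q_total, q_total))
    print("most probable split, coffee holds", best, "of", q_total, "units")
    print("most probable split, S/k_B:", entropy(n_coffee, n_room, best, q_total))
```

In this toy model the state with all the energy packed into the coffee has far fewer microstates than the state where the energy is shared roughly in proportion to size, so the shared state is overwhelmingly the more probable one.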

Callisto had this in mind when he spoke about the so-called heat death of the universe, that time when coffee cups and everything else in the universe have equilibrated to a lifeless, uniform state:

“He had known all along, of course, that nothing but a theoretical engine or system ever runs at 100% efficiency; and about the theorem of Clausius, which states that the entropy of an isolated system always continually increases. It was not, however, until Gibbs and Boltzmann brought to this principle the methods of statistical mechanics that the horrible significance of it all dawned on him: only then did he realize that the isolated system – galaxy, engine, human being, culture, whatever – must evolve spontaneously toward the Condition of the More Probable.”

Newton’s Second Law and Entropy

We’re now ready to get back to our original question – what does F = ma have to do with entropy? Here’s the answer: In statistical mechanics, the molecules of a system bounce and jostle around in accordance with Newton’s second law of motion, and such a system inevitably evolves toward a more disordered, more probable state. In other words, set up a system of molecules that obey the rule F = ma, and you automatically get a spontaneous increase in entropy. We won’t get into the math here, but this is essentially how Boltzmann, Gibbs, and Maxwell approached the concept of entropy.
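A small simulation can illustrate the claim without any formal math. This is a minimal sketch under strong assumptions, not a reconstruction of how Boltzmann, Gibbs, or Maxwell worked: the particles live in a one-dimensional box, do not interact with each other, and feel no force except elastic bounces off the walls, so F = ma reduces to constant velocity between bounces. "Entropy" here is a coarse-grained measure (how evenly particles are spread across ten equal bins), and every name and parameter value is a hypothetical choice for illustration.

```python
import math
import random


def coarse_entropy(positions, box_length, bins=10):
    """Entropy of the particle distribution over equal-width bins (natural log)."""
    counts = [0] * bins
    for x in positions:
        index = min(int(x / box_length * bins), bins - 1)
        counts[index] += 1
    total = len(positions)
    return -sum((c / total) * math.log(c / total) for c in counts if c)


def simulate(n_particles=1000, steps=300, dt=0.01, box_length=1.0,
             mass=1.0, seed=0):
    """Step particles forward with a = F / m and record entropy at each step."""
    rng = random.Random(seed)
    # Ordered start: every particle is crowded into the left tenth of the box.
    xs = [rng.uniform(0.0, 0.1 * box_length) for _ in range(n_particles)]
    vs = [rng.gauss(0.0, 1.0) for _ in range(n_particles)]
    history = [coarse_entropy(xs, box_length)]
    for _ in range(steps):
        for i in range(n_particles):
            force = 0.0                    # assumed: no force between wall bounces
            vs[i] += (force / mass) * dt   # Newton's second law: a = F / m
            xs[i] += vs[i] * dt
            if xs[i] < 0.0:                # elastic bounce off the left wall
                xs[i] = -xs[i]
                vs[i] = -vs[i]
            elif xs[i] > box_length:       # elastic bounce off the right wall
                xs[i] = 2.0 * box_length - xs[i]
                vs[i] = -vs[i]
        history.append(coarse_entropy(xs, box_length))
    return history


if __name__ == "__main__":
    history = simulate()
    print("entropy at start:", history[0])
    print("entropy at end:  ", history[-1])
    print("maximum possible:", math.log(10))
```

Starting from the crowded, ordered arrangement, the coarse-grained entropy climbs toward its maximum as the particles spread out, with small fluctuations along the way. The increase depends on the special starting condition and on the coarse-graining into bins; the underlying motion is still just Newton's second law applied to each particle.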

This statistical mechanical definition of entropy is represented by Meatball Mulligan’s storyline: as his lease-breaking party progresses, the movements and actions of objects and individuals gradually move toward a more disordered state. To complete the metaphor, Meatball and his guests are like molecules bouncing around, obeying F = ma, and evolving spontaneously toward a more disordered state.

So why did Pynchon inscribe “Entropy” with F = ma?

When Pynchon inscribed F = ma in Greg Chesson’s copies of “Entropy,” perhaps he was offering a more ironic take: we think we’re in control of our lives, but really, most of the time we’re just particles in the system, subject to forces we can’t control, inevitably and irreversibly moving toward our final disordered state.

***

Mike White is an Assistant Professor of Genetics at the Washington University School of Medicine in St. Louis, a contributing science writer at Pacific Standard, a science blogger at The Finch & Pea, and an unrepentant Pynchon fan.

F=ma, where “ma” in English is a synonym for “mother” and Pynchon’s mother, Katherine Frances Bennett, descended from prominent Irish men and women. My hypothesis is that as much in Pynchon derives from his Irish forebears and from James Joyce (as literary forebear) as from his Puritan forebears, William Pynchon, 1630, &c, FWIW. For Joyce (and Pynchon) a mother (her love, one’s love for her) is a force: “AMOR MATRIS, subjective and objective genitive, may be the only true thing in life.” (Ulysses 9.843-4)